The Thought Process

I knew that the next step for my lab was to go full cluster. I work on Kubernetes every day on the job and everything I'm running at home is containerized, so why not run it clustered at home? Well, two main things were standing in my way:

Cost (cluster means more machines)

Bare metal clusters? When was the last time I touched those?

99.9% of the time I'm working with a Kubernetes cluster, it's a managed service living somewhere else. I don't necessarily need to dig into the weeds with things like CNI setup, control plane availability, and node-to-node connectivity. These are all handled for you to an extent by the major managed offerings like EKS, AKS, and GKE. I tell Terraform what to make, it builds it, and everything "just works".

Honestly, I have probably gotten lazy with the inner workings of cluster bootstrapping in the last couple of years because of just how automated things are. This is great for day-to-day administration, but if things get hairy, you want to make sure you know all the vital components of the architecture you're running. What's going on with the scheduler? Is my control plane highly available? What CNI should I be using and why? I wanted to use this as a way to refamiliarize myself with some of the inner workings of Kubernetes and do it in an environment that isn't costing a customer revenue if I mess up.

Existing Hardware

This is an upgrade, which means at the end of the day, there's existing hardware I'm already using. As I stated in my last post, my latest upgrade before this was transitioning my home server into a rack mount case and upgrading the CPU to a 12600K to allow for Intel Quick Sync transcoding with Plex. I try to Direct Play my content as much as possible, but my parents back in Pittsburgh have a measly 20Mbps download speed, which makes things difficult for them. Having the ability to use hardware transcoding for streaming to them has reduced the load significantly on my server and now there's no looking back.

Now the main decision was how to move forward. Do I virtualize? I have plenty of co-workers who do that now and it seems to work well (Hi Clifford). I could use Proxmox or vSphere on my existing machine and set the nodes up as VMs, cluster them together, and voila! I almost did. It would reduce the cost at the end of the day and transition all upgrades to software with whatever hypervisor I decided to go with. However, I eventually decided against virtualization and went with a bare metal cluster of multiple physical nodes.

Why? I wanted to buy new stuff of course! In reality, it boiled down to what I said before: I want to use this cluster as a way to learn more. I wanted to hone my skills on the 0.1% of things that don't fall under my "standard" cloud use case at work. Virtualization definitely would have taught me new things, but I felt that a bare metal cluster had more to offer. What if just one of my nodes goes down? Can I make it highly available and fault tolerant? If I virtualized, everything dies when my one physical machine dies. Again, the entire purpose of a lab is to experiment and learn, and what better way to do that than get my hands dirty on all the aspects of provisioning a physical cluster? ....and because I wanted to buy new stuff of course.

So now I needed to decide what to do with the existing machine. This was easy enough as all my storage is housed here and I need the new cluster to be able to access the existing ZFS pool. There were two thoughts that I had:

Transition this to a large worker node

Just use it as an NFS server

I wanted my master and worker nodes to be fairly uniform, so I decided against adding this as a node and turned the existing machine into a TrueNAS storage appliance. This allowed me to share my existing storage over NFS, which Kubernetes volumes can natively connect to, making my life a whole lot easier. With TrueNAS I also got the added feature of baked-in S3-compatible storage with MinIO. This allows me to back up my Persistent Volumes to the TrueNAS server, but we'll cover that more in my storage writeup.
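To make the "natively connect" part a bit more concrete, here is a minimal sketch of what a statically defined NFS-backed PersistentVolume could look like, built as a plain Python dictionary and printed as JSON. The volume name, server address, export path, and size are hypothetical placeholders, not values from my actual setup, and the access mode and reclaim policy are just reasonable guesses for shared media storage.

```python
import json


def nfs_persistent_volume(name, server, export_path, size):
    """Build a Kubernetes PersistentVolume manifest backed by an NFS export."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": size},
            # NFS exports can be mounted read-write by many pods at once.
            "accessModes": ["ReadWriteMany"],
            # Keep the data on the NAS even if the claim is deleted.
            "persistentVolumeReclaimPolicy": "Retain",
            "nfs": {"server": server, "path": export_path},
        },
    }


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    pv = nfs_persistent_volume(
        name="media-nfs",
        server="192.168.1.50",
        export_path="/mnt/tank/media",
        size="1Ti",
    )
    print(json.dumps(pv, indent=2))
```

Since kubectl accepts JSON as well as YAML, the printed manifest could be piped into `kubectl apply -f -` once the placeholders are swapped for real values.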

The New Hardware

The most fun part of any upgrade begins....what shiny new toy do I get to buy? I love consumer tech and any excuse I get to buy something new is fun for me, but any time I sink some money into improvements I want to make sure I'm making practical decisions on what I already have, and what I'm looking to improve with my current setup.

After some research, I landed on the Intel NUC 11 devices to be used as my cluster nodes. They aren't the most recent NUC offerings, but they had everything I needed and would let me save a couple hundred dollars per node compared with buying the newest NUC 12 or even the recently released NUC 13. These small form factor PCs pack a mighty punch with Intel Quick Sync, a 2.5Gb onboard NIC, and room for both a SATA drive and a PCIe 4.0 NVMe drive.

Below is my planned strategy:

| Device | OS Disk Size | Data Disk Size | RAM | Operating System | Purpose |
| --- | --- | --- | --- | --- | --- |
| NUC11PAHi5 x 3 | 250GB NVMe | - | 32GB DDR4 | Ubuntu | Kubernetes Master Nodes |
| NUC11PAHi7 x 3 | 250GB SATA | 4TB NVMe | 64GB DDR4 | Ubuntu | Kubernetes Worker Nodes |

I purchased one of each to start with and began setting them up, knowing that I could add more master and worker nodes in the future as I needed/wanted. The master nodes are using 32GB of RAM and a single 250GB NVMe drive as the OS drive, while the worker nodes are getting a beefier 64GB of RAM along with a 250GB SATA drive for the OS and a 4TB PCIe 4.0 NVMe data drive. The data drive will house the persistent storage for all stateful workloads, through either Rook-Ceph or Longhorn, which I'll detail later (spoiler alert, it's Longhorn).

The eventual goal is 3 of each, which will allow for an HA setup for the control plane. Is it complete overkill? For sure, but that's half the fun (:
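Why 3 and not 2? Here's a quick sketch of the majority math, assuming the control plane stores its state in etcd running on the master nodes (the common kubeadm-style layout, which I haven't detailed in this post). etcd needs a majority of members available to keep accepting writes, so the number of failures you can survive only goes up at odd member counts. The function names are just illustrative.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with `members` voting members."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(
            f"{n} control plane node(s): quorum={quorum(n)}, "
            f"tolerates {fault_tolerance(n)} failure(s)"
        )
```

With a single master, or even two, losing one node takes the control plane down. Three is the smallest count that survives a node failure, which is why it's the target.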

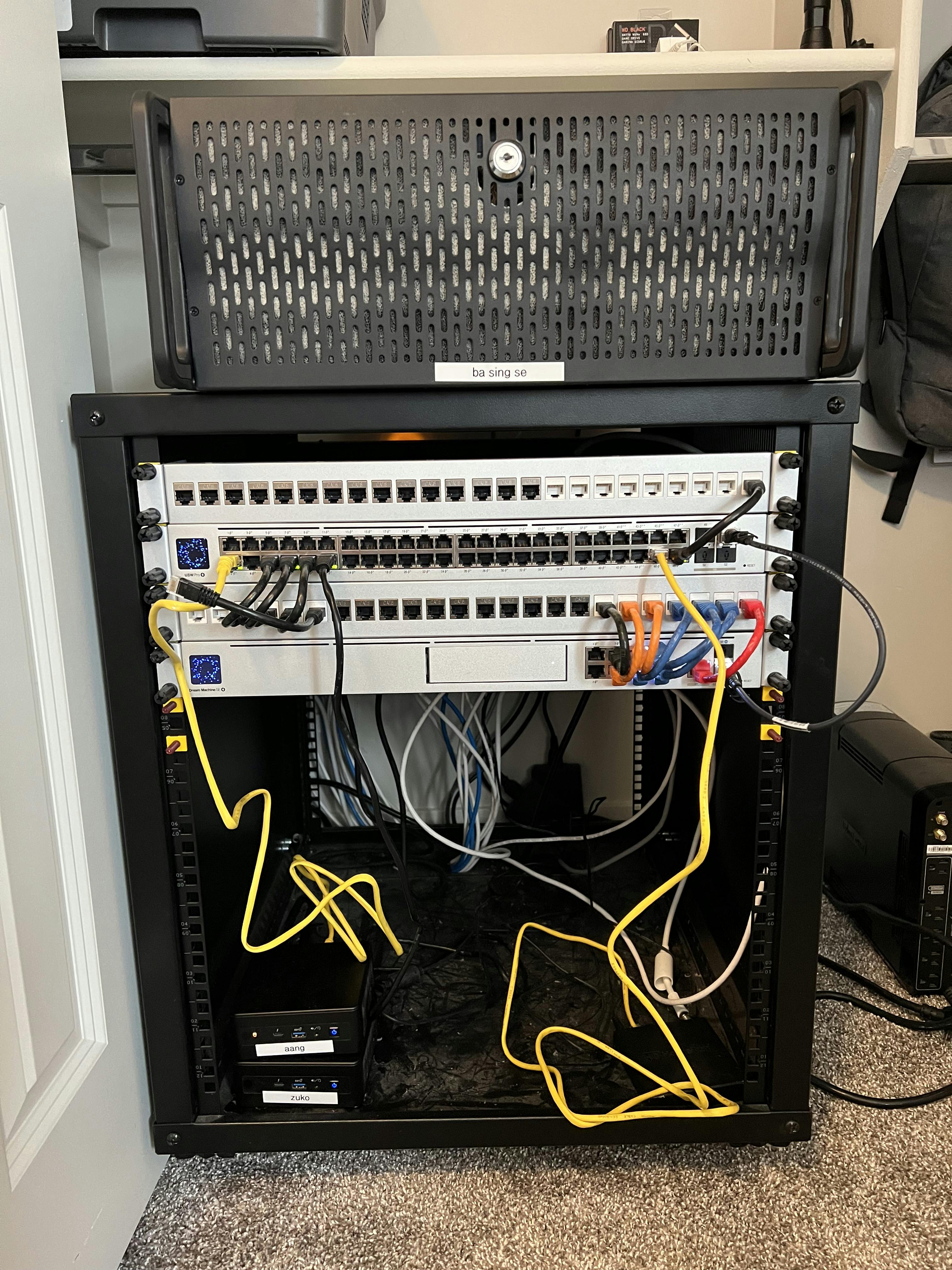

So we have the old hardware repurposed, the new hardware purchased and built, and all of it integrated into the rack. Here's how it all turned out (please ignore the ugly cables):

I love Avatar the Last Airbender as you can tell....but everything is up and running!

Future Considerations

Below are some of the additional hardware ideas I have for the future:

HA Control plane by adding 2 more master nodes

Coral AI USB Accelerator for Home Video Recording (Frigate)

Pi-KVM and Network KVM setup to manage all nodes remotely

UPS backup

Feel free to comment with your setup or any questions and I'd be glad to answer anything! Next up, we'll look at the bootstrapping process to get these nodes joined into a cluster in an automated fashion!